CCDAK Confluent Certified Developer for Apache Kafka Certification Examination Free Practice Exam Questions (2026 Updated)

Prepare for the Confluent Certified Developer for Apache Kafka (CCDAK) certification examination with our collection of free practice questions. Each question is designed to mirror the actual exam format and objectives, complete with answers and detailed explanations. Our materials are regularly updated for 2026, so you have current resources to build confidence and succeed on your first attempt.

You are writing to a topic with acks=all.

The producer receives acknowledgments but you notice duplicate messages.

You find that timeouts due to network delay are causing resends.

Which configuration should you use to prevent duplicates?
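One setting commonly used for this situation is the idempotent producer, which lets the broker recognize and discard a retried batch it has already written. The sketch below only builds the relevant properties with `java.util.Properties`; the class name `IdempotentProducerConfig` and the broker address are placeholders, and in a real application the properties would be passed to a `KafkaProducer`.

```java
import java.util.Properties;

final class IdempotentProducerConfig {
    // Builds producer settings; "localhost:9092" is a placeholder address.
    static Properties build() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092");
        props.setProperty("acks", "all");
        // With idempotence enabled, a resend caused by a timeout is not written twice.
        props.setProperty("enable.idempotence", "true");
        return props;
    }

    public static void main(String[] args) {
        build().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```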

Which configuration allows more time for the consumer poll to process records?
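The consumer setting that bounds the time between two `poll()` calls is `max.poll.interval.ms`; if processing takes longer than that, the consumer is considered failed and the group rebalances. The following is a minimal sketch of consumer properties, assuming a hypothetical group name, broker address, and a ten-minute limit chosen only for illustration.

```java
import java.util.Properties;

final class SlowProcessingConsumerConfig {
    // Builds consumer settings; the address, group id and values are illustrative assumptions.
    static Properties build() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092");
        props.setProperty("group.id", "example-group");
        // Allow up to 10 minutes between poll() calls (the default is 5 minutes).
        props.setProperty("max.poll.interval.ms", "600000");
        // Fewer records per poll() also shortens the processing time of each batch.
        props.setProperty("max.poll.records", "100");
        return props;
    }

    public static void main(String[] args) {
        build().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```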

The producer code below features a Callback class with a method called onCompletion().

In the onCompletion() method, when the request is completed successfully, what does the value metadata.offset() represent?

An application is consuming messages from Kafka.

The application logs show that partitions are frequently being reassigned within the consumer group.

Which two factors may be contributing to this?

(Select two.)

What is accomplished by producing data to a topic with a message key?
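To illustrate the effect of a key, the sketch below maps a key to a partition by hashing its bytes and taking the result modulo the partition count. Kafka's default partitioner uses a murmur2 hash of the serialized key; `Arrays.hashCode` is a stand-in used here only to keep the example dependency-free, and the class name and partition count are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class KeyPartitionSketch {
    // Stand-in for the default partitioner: hash the key bytes, then take the
    // non-negative remainder by the number of partitions.
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return Math.floorMod(Arrays.hashCode(keyBytes), numPartitions);
    }

    public static void main(String[] args) {
        int partitions = 6; // hypothetical partition count
        for (String key : new String[] {"customer-1", "customer-2", "customer-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

Because the mapping depends only on the key and the partition count, records with the same key land in the same partition, which preserves their relative order.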

You are working on a Kafka cluster with three nodes. You create a topic named orders with:

replication.factor = 3

min.insync.replicas = 2

acks = all

What exception will be generated if two brokers are down due to network delay?
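The rule behind this scenario can be modeled as a simple comparison: a write with acks=all is accepted only while the number of in-sync replicas is at least min.insync.replicas, and otherwise the broker rejects it with a `NotEnoughReplicasException`. The sketch below is a toy model rather than broker code; the class and method names are hypothetical, and it assumes the two unreachable brokers have dropped out of the in-sync replica set.

```java
final class MinIsrSketch {
    // True when a write with acks=all would be accepted under this toy model.
    static boolean acceptsAcksAllWrite(int inSyncReplicas, int minInSyncReplicas) {
        return inSyncReplicas >= minInSyncReplicas;
    }

    public static void main(String[] args) {
        int replicationFactor = 3;
        int minInSyncReplicas = 2;
        int brokersDown = 2;
        // Assumption: every unreachable broker has left the in-sync replica set.
        int inSyncReplicas = replicationFactor - brokersDown;
        System.out.println("in-sync replicas: " + inSyncReplicas);
        System.out.println("write accepted: " + acceptsAcksAllWrite(inSyncReplicas, minInSyncReplicas));
    }
}
```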

Which two statements are correct about transactions in Kafka?

(Select two.)

Which configuration is valid for deploying a JDBC Source Connector to read all rows from the orders table and write them to the dbl-orders topic?

You have a Kafka Connect cluster with multiple connectors deployed.

One connector is not working as expected.

You need to find logs related to that specific connector to investigate the issue.

How can you find the connector’s logs?

Your configuration parameters for a Source connector and Connect worker are:

• offset.flush.interval.ms=60000

• offset.flush.timeout.ms=500

• offset.storage.topic=connect-offsets

• offset.storage.replication.factor=-1

Which two statements match the expected behavior?

(Select two.)

You have a topic t1 with six partitions. You use Kafka Connect to send data from topic t1 in your Kafka cluster to Amazon S3. Kafka Connect is configured for two tasks.

How many partitions will each task process?
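Sink connector tasks read as members of one consumer group, so the topic's partitions are divided among the tasks. The arithmetic can be sketched as follows, assuming a simple round-robin spread; the actual partition-to-task mapping depends on the consumer group assignor, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class TaskAssignmentSketch {
    // Spreads partition numbers 0..partitions-1 over the tasks in round-robin order.
    static List<List<Integer>> assign(int partitions, int tasks) {
        List<List<Integer>> result = new ArrayList<>();
        for (int t = 0; t < tasks; t++) {
            result.add(new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            result.get(p % tasks).add(p);
        }
        return result;
    }

    public static void main(String[] args) {
        List<List<Integer>> assignment = assign(6, 2);
        for (int t = 0; t < assignment.size(); t++) {
            System.out.println("task " + t + " -> " + assignment.get(t));
        }
    }
}
```

With six partitions and two tasks, each task ends up with three partitions.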

Clients that connect to a Kafka cluster are required to specify one or more brokers in the bootstrap.servers parameter.

What is the primary advantage of specifying more than one broker?

You are creating a Kafka Streams application to process retail data.

Match the input data streams with the appropriate Kafka Streams object.

You are sending messages to a Kafka cluster in JSON format and want to add more information related to each message:

• Format of the message payload

• Message creation time

• A globally unique identifier that allows the message to be traced through the system

Where should this additional information be set?

You have a Kafka client application that has real-time processing requirements.

Which Kafka metric should you monitor?

Which partition assignment minimizes partition movements between two assignments?

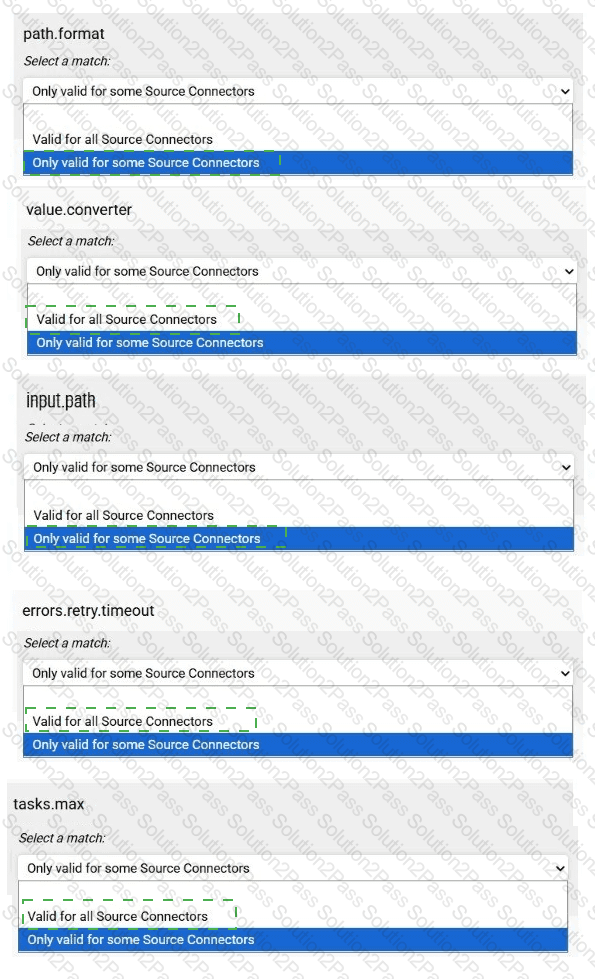

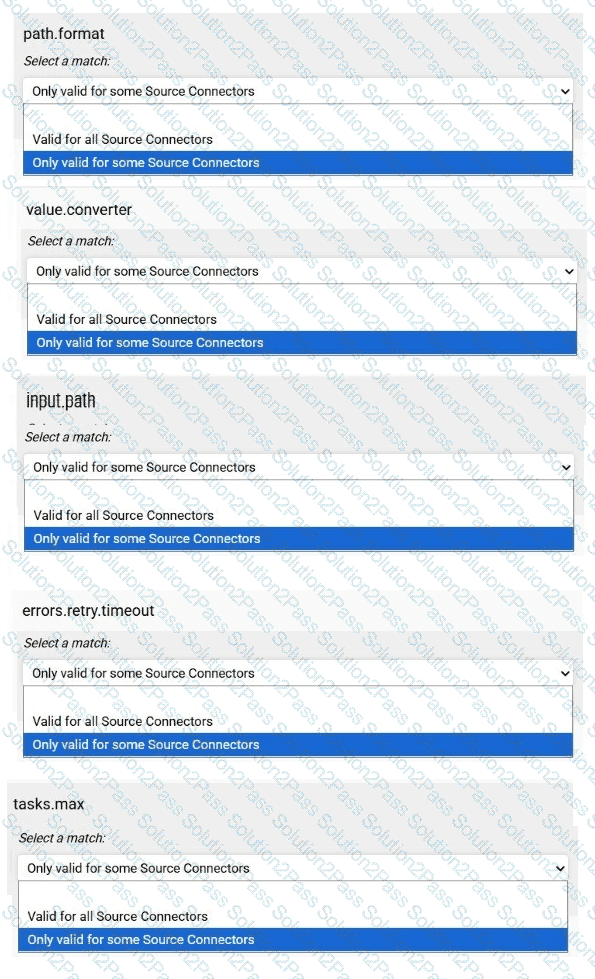

Match each configuration parameter with the correct option.

To answer, choose a match for each option from the drop-down. Partial credit is given for each correct answer.

You want to connect with username and password to a secured Kafka cluster that has SSL encryption.

Which properties must your client include?
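Username and password authentication over an encrypted connection is typically configured with the `SASL_SSL` security protocol plus a SASL mechanism and a JAAS login entry. The sketch below assembles such client properties, assuming the PLAIN mechanism (a cluster may use SCRAM instead); the class name, broker address, and credentials are placeholders.

```java
import java.util.Properties;

final class SaslSslClientConfig {
    // Builds client security settings; the mechanism and address are assumptions.
    static Properties build(String username, String password) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "broker.example.com:9093");
        // SASL authentication carried over a TLS-encrypted connection.
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "PLAIN");
        // JAAS entry that supplies the credentials to the PLAIN login module.
        props.setProperty("sasl.jaas.config",
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
                + "username=\"" + username + "\" password=\"" + password + "\";");
        return props;
    }

    public static void main(String[] args) {
        build("alice", "alice-secret").forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```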

You need to consume messages from Kafka using the command-line interface (CLI).

Which command should you use?

You need to correctly join data from two Kafka topics.

Which two scenarios will allow for co-partitioning?

(Select two.)