DP-700 Microsoft Implementing Data Engineering Solutions Using Microsoft Fabric Free Practice Exam Questions (2026 Updated)

Prepare effectively for your Microsoft DP-700 Implementing Data Engineering Solutions Using Microsoft Fabric certification with our extensive collection of free, high-quality practice questions. Each question is designed to mirror the actual exam format and objectives, complete with comprehensive answers and detailed explanations. Our materials are regularly updated for 2026, ensuring you have the most current resources to build confidence and succeed on your first attempt.

You need to ensure that the data analysts can access the gold layer lakehouse.

What should you do?

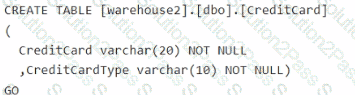

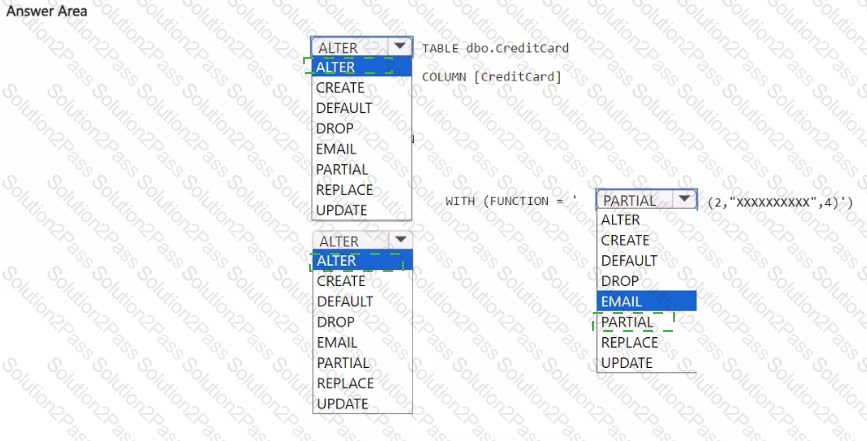

You have a Fabric workspace named Workspace1 that contains a warehouse named Warehouse2. A team of data analysts has Viewer role access to Workspace1. You create a table by running the following statement.

You need to ensure that the team can view only the first two characters and the last four characters of the Creditcard attribute.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
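To make the masking requirement above concrete, here is a minimal Python sketch of what a partial mask does to a value: it keeps a fixed number of leading and trailing characters and replaces everything in between with a padding string. This only models the behavior and is not the T-SQL statement the question asks for. The function name `partial_mask`, the sample card number, and the handling of values that are too short are assumptions made for illustration.

```python
def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Keep the first `prefix` and last `suffix` characters of `value`.

    Everything in between is replaced by `padding`. This mirrors the idea of a
    partial data mask; it is a hypothetical helper, not a Fabric or SQL API.
    """
    # Assumption: if the value is too short to expose both ends safely,
    # return only the padding so that nothing leaks.
    if len(value) <= prefix + suffix:
        return padding
    return value[:prefix] + padding + value[len(value) - suffix:]


# Example: expose the first two and last four characters of a made-up number.
masked = partial_mask("1234567890123456", 2, "XXXX", 4)
print(masked)
```

In a warehouse, the equivalent rule is normally declared on the column itself, so users without unmask permission see the masked form while the stored data is unchanged.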

You need to create a workflow for the new book cover images.

Which two components should you include in the workflow? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

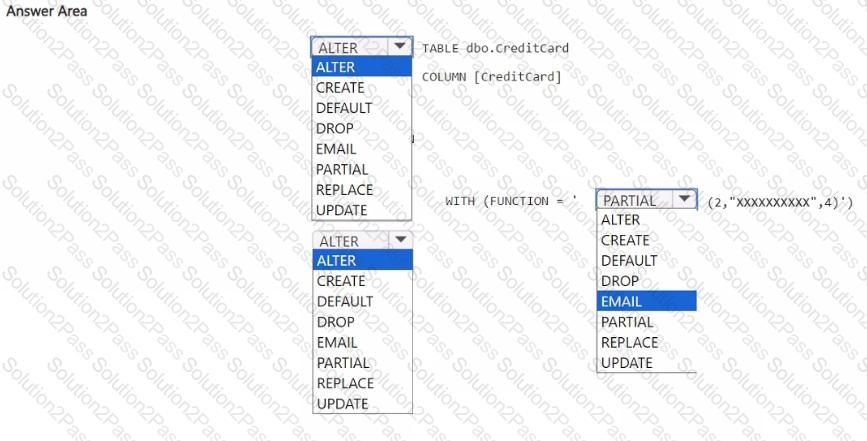

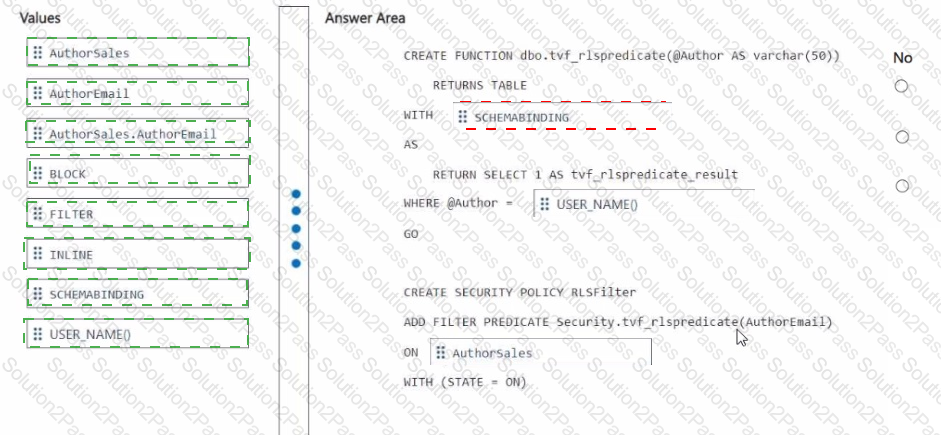

You need to ensure that the authors can see only their respective sales data.

How should you complete the statement? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

You need to resolve the sales data issue. The solution must minimize the amount of data transferred.

What should you do?

What should you do to optimize the query experience for the business users?

You need to ensure that processes for the bronze and silver layers run in isolation. How should you configure the Apache Spark settings?

HOTSPOT

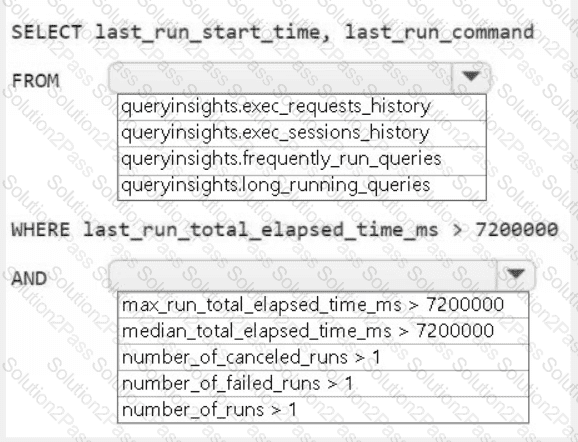

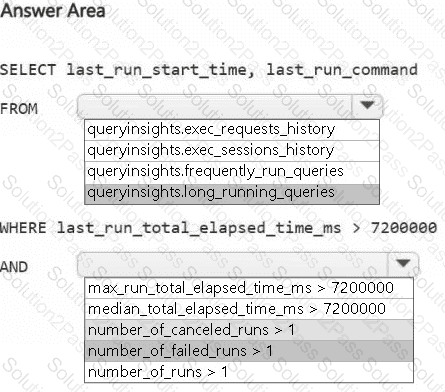

You need to troubleshoot the ad-hoc query issue.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to implement the solution for the book reviews.

What should you do?

You need to ensure that WorkspaceA can be configured for source control. Which two actions should you perform?

Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

You need to ensure that usage of the data in the Amazon S3 bucket meets the technical requirements.

What should you do?

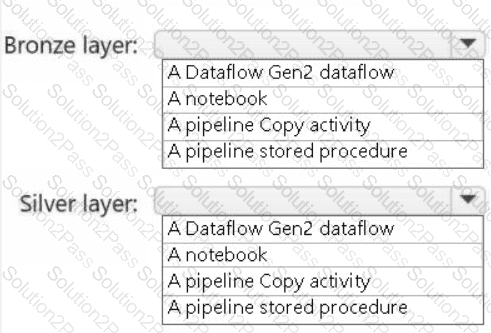

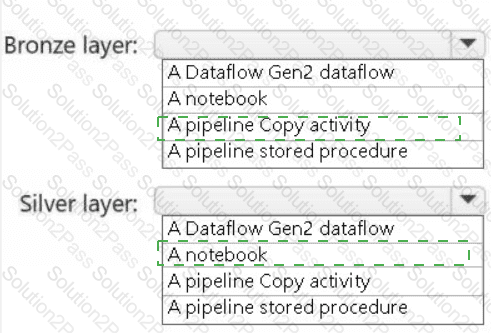

You need to recommend a method to populate the POS1 data to the lakehouse medallion layers.

What should you recommend for each layer? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

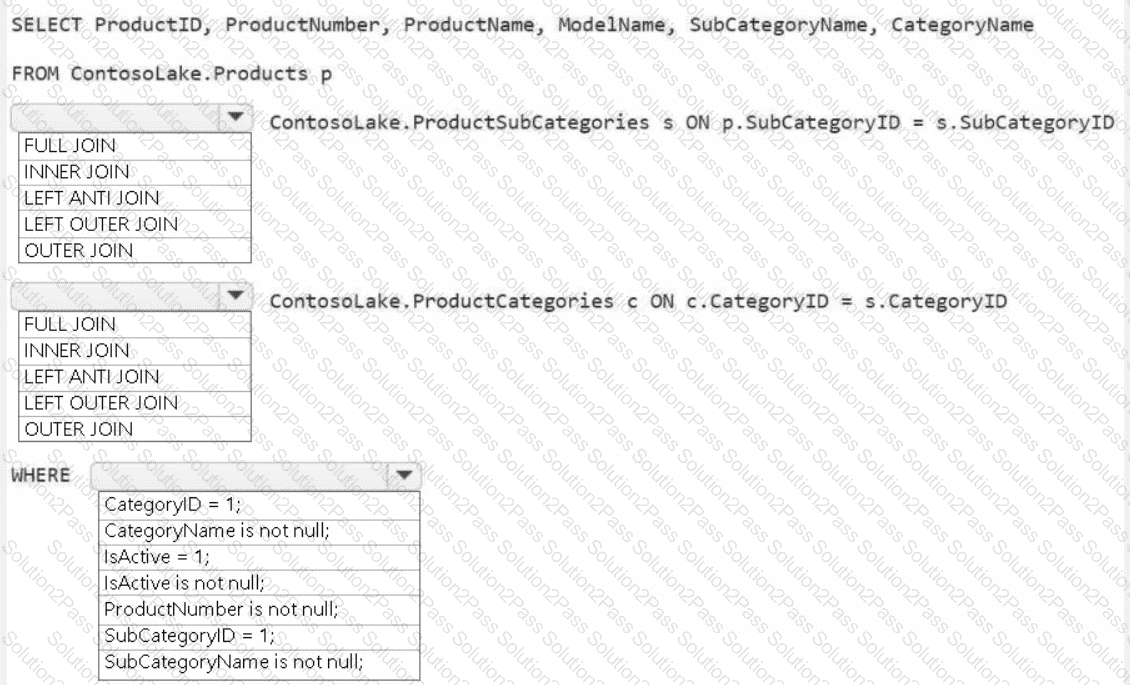

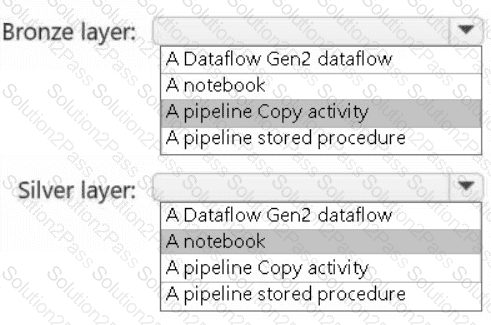

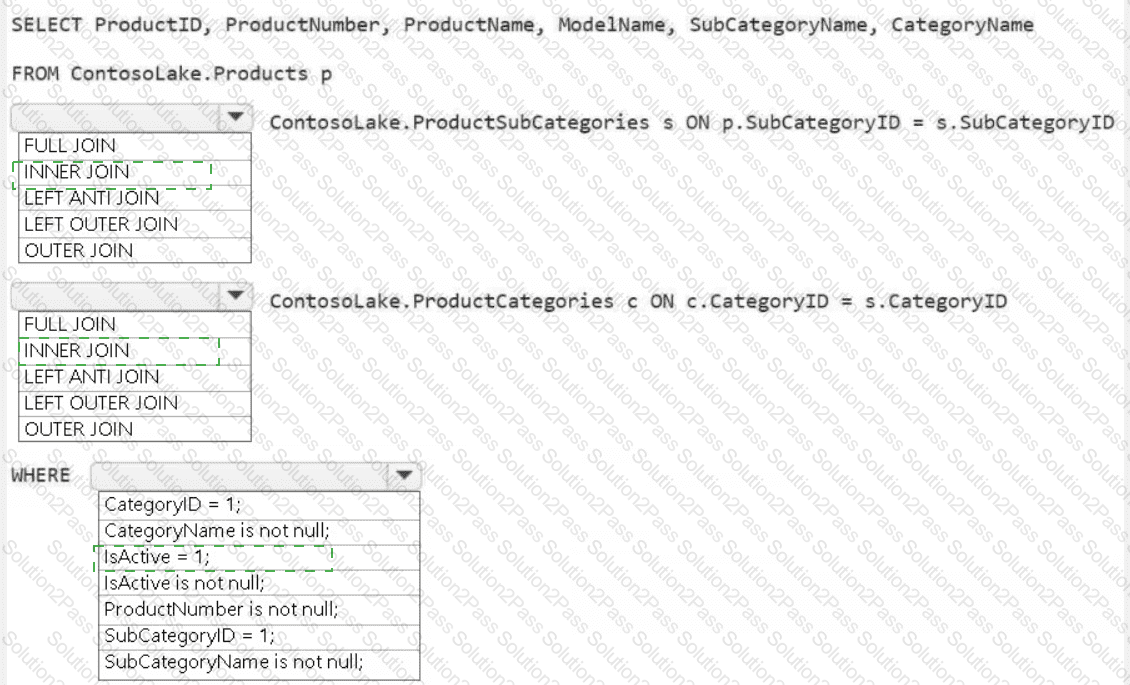

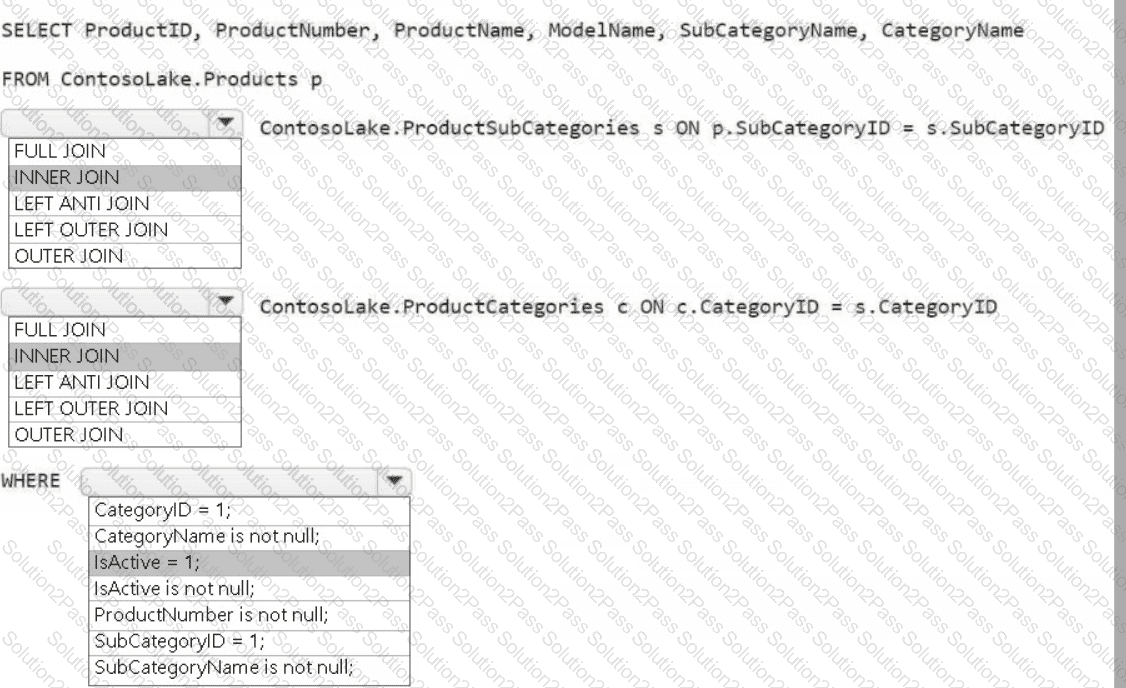

You need to create the product dimension.

How should you complete the Apache Spark SQL code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

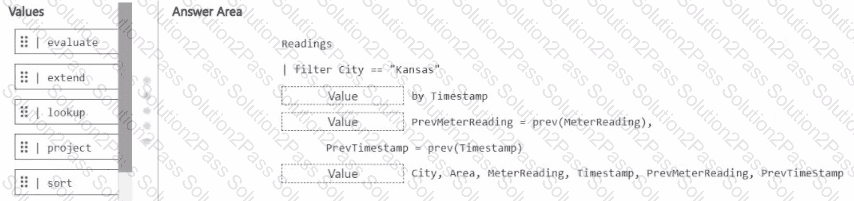

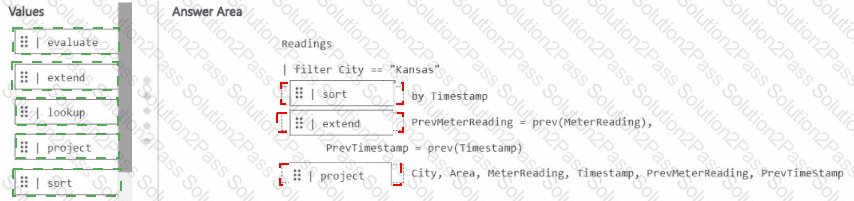

You have a KQL database that contains a table named Readings.

You need to build a KQL query to compare the Meter-Reading value of each row to the previous row based on the Timestamp value.

A sample of the expected output is shown in the following table.

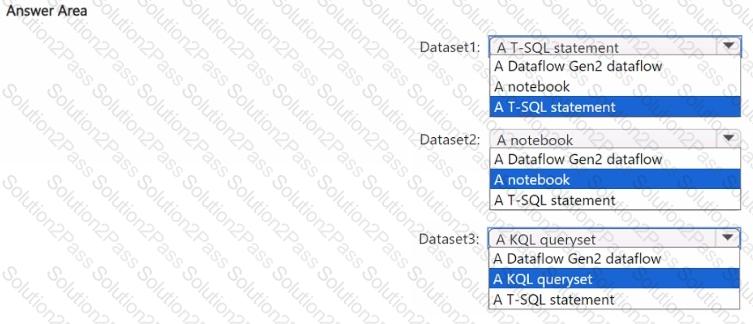

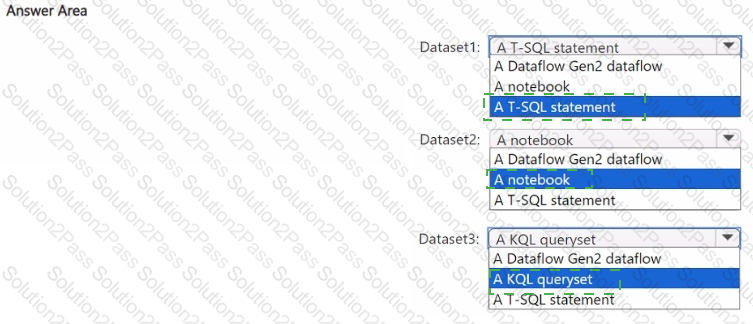

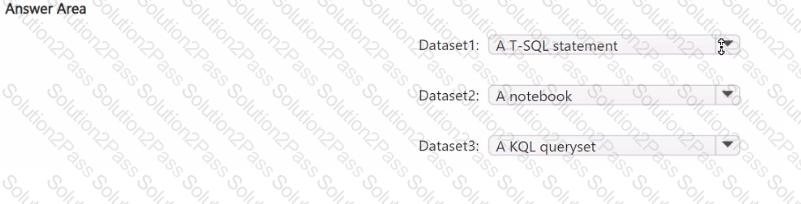
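The row-to-previous-row comparison described in the Readings question can be sketched in plain Python: order the rows by time, then carry the previous reading forward while walking the list. In KQL the same idea is usually expressed over a sorted (serialized) row set with a previous-row function such as `prev()`. The field names `Timestamp` and `MeterReading`, the helper name `with_previous`, and the sample values below are assumptions for illustration and are not taken from the exam table.

```python
def with_previous(rows):
    """Return rows ordered by Timestamp, each with the previous reading and the delta.

    `rows` is a list of dicts with hypothetical keys "Timestamp" and "MeterReading".
    The first row has no predecessor, so its PrevReading and Delta are None.
    """
    ordered = sorted(rows, key=lambda r: r["Timestamp"])
    result = []
    prev_reading = None
    for row in ordered:
        delta = None if prev_reading is None else row["MeterReading"] - prev_reading
        result.append({**row, "PrevReading": prev_reading, "Delta": delta})
        prev_reading = row["MeterReading"]
    return result


# Made-up sample data, deliberately out of order.
sample = [
    {"Timestamp": 3, "MeterReading": 17},
    {"Timestamp": 1, "MeterReading": 10},
    {"Timestamp": 2, "MeterReading": 14},
]
for row in with_previous(sample):
    print(row)
```

Sorting first matters: a previous-row lookup is only meaningful once the row order is fixed, which is why the ordering step comes before the comparison.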

You plan to process the following three datasets by using Fabric:

• Dataset1: This dataset will be added to Fabric and will have a unique primary key between the source and the destination. The unique primary key will be an integer and will start from 1 and have an increment of 1.

• Dataset2: This dataset contains semi-structured data that uses bulk data transfer. The dataset must be handled in one process between the source and the destination. The data transformation process will include the use of custom visuals to understand and work with the dataset in development mode.

• Dataset3: This dataset is in a lakehouse. The data will be bulk loaded. The data transformation process will include row-based windowing functions during the loading process.

You need to identify which type of item to use for the datasets. The solution must minimize development effort and use built-in functionality, when possible. What should you identify for each dataset? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have a Fabric workspace.

You have semi-structured data.

You need to read the data by using T-SQL, KQL, and Apache Spark. The data will only be written by using Spark.

What should you use to store the data?

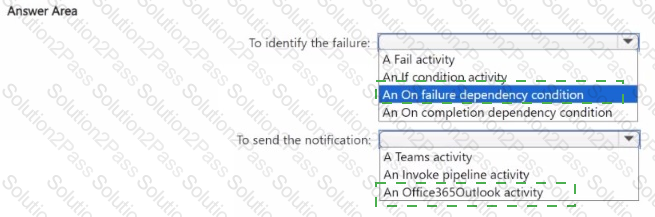

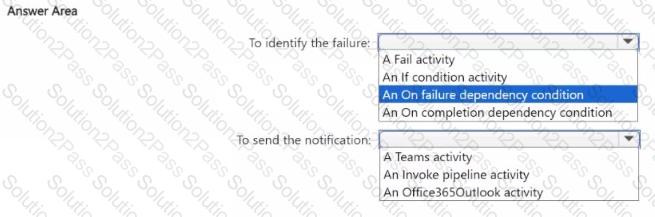

You need to ensure that the data engineers are notified if any step in populating the lakehouses fails. The solution must meet the technical requirements and minimize development effort.

What should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to populate the MAR1 data in the bronze layer.

Which two types of activities should you include in the pipeline? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

You need to recommend a solution to resolve the MAR1 connectivity issues. The solution must minimize development effort. What should you recommend?

You need to schedule the population of the medallion layers to meet the technical requirements.

What should you do?
