ARA-C01 Snowflake SnowPro Advanced: Architect Certification Exam Free Practice Exam Questions (2026 Updated)

Prepare effectively for your Snowflake ARA-C01 SnowPro Advanced: Architect certification exam with our extensive collection of free, high-quality practice questions. Each question is designed to mirror the actual exam format and objectives, complete with comprehensive answers and detailed explanations. Our materials are regularly updated for 2026, ensuring you have the most current resources to build confidence and succeed on your first attempt.

A company has a source system that provides JSON records for various IoT operations. The JSON is loaded directly into a persistent table with a variant field. The data is quickly growing to hundreds of millions of records and performance is becoming an issue. There is a generic access pattern that is used to filter on the create_date key within the variant field.

What can be done to improve performance?
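The access pattern in this scenario can be illustrated outside Snowflake with a rough Python analogy. The sketch below is not Snowflake code and does not model how Snowflake stores or prunes data. It only contrasts parsing every JSON record on each query with extracting `create_date` once into a typed, sorted structure. The record layout, device names, and all function names are hypothetical.

```python
import json
from bisect import bisect_left, bisect_right
from datetime import date

# Hypothetical IoT records kept as raw JSON text (a stand-in for a variant field).
raw_records = [
    json.dumps({"device": f"sensor-{i}", "create_date": f"2024-01-{(i % 28) + 1:02d}"})
    for i in range(1000)
]

def filter_by_parsing(records, wanted):
    """Parse every record on every query, then compare the create_date key."""
    return [r for r in records if json.loads(r)["create_date"] == wanted.isoformat()]

def build_date_index(records):
    """Extract create_date once into a sorted list of (date, position) pairs."""
    pairs = [
        (date.fromisoformat(json.loads(r)["create_date"]), position)
        for position, r in enumerate(records)
    ]
    pairs.sort()
    return pairs

def filter_by_index(records, index, wanted):
    """Binary-search the sorted dates and touch only the matching records."""
    lo = bisect_left(index, (wanted, -1))
    hi = bisect_right(index, (wanted, len(records)))
    return [records[position] for _, position in index[lo:hi]]

index = build_date_index(raw_records)
target = date(2024, 1, 15)
slow = filter_by_parsing(raw_records, target)
fast = filter_by_index(raw_records, index, target)
print(len(slow), len(fast), slow == fast)
```

Both functions return the same records. The second one pays the parsing cost once, when the index is built, instead of on every filter.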

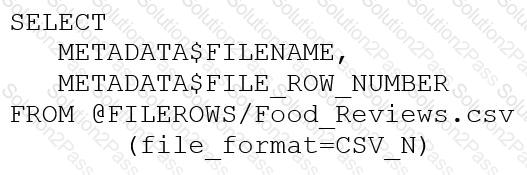

An Architect runs the following SQL query:

How can this query be interpreted?

A company is using a Snowflake account in Azure. The account has SAML SSO set up using ADFS as a SCIM identity provider. To validate Private Link connectivity, an Architect performed the following steps:

* Confirmed Private Link URLs are working by logging in with a username/password account

* Verified DNS resolution by running nslookups against Private Link URLs

* Validated connectivity using SnowCD

* Disabled public access using a network policy set to use the company’s IP address range

However, the following error message is received when using SSO to log into the company account:

IP XX.XXX.XX.XX is not allowed to access snowflake. Contact your local security administrator.

What steps should the Architect take to resolve this error and ensure that the account is accessed using only Private Link? (Choose two.)
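The error in this scenario comes from an IP allow-list check. A minimal sketch of that kind of check, using only Python's standard `ipaddress` module, is shown below. It does not reproduce how Snowflake evaluates network policies. All addresses are hypothetical: `203.0.113.0/24` is a reserved documentation range standing in for the company's range, and `10.0.5.0/24` stands in for a private range.

```python
from ipaddress import ip_address, ip_network

# Hypothetical allow-list; 203.0.113.0/24 is a reserved documentation range
# standing in for the company's IP address range.
ALLOWED_NETWORKS = [ip_network("203.0.113.0/24")]

def is_allowed(client_ip, allowed_networks=ALLOWED_NETWORKS):
    """Return True if client_ip falls inside at least one allowed network."""
    address = ip_address(client_ip)
    return any(address in network for network in allowed_networks)

def check_login(client_ip, allowed_networks=ALLOWED_NETWORKS):
    """Mimic the shape of the error in the question for a rejected address."""
    if is_allowed(client_ip, allowed_networks):
        return "allowed"
    return f"IP {client_ip} is not allowed to access snowflake."

print(check_login("203.0.113.25"))  # inside the listed range
print(check_login("10.0.5.7"))      # hypothetical private address, not listed

# Adding the (hypothetical) private range to the list changes the outcome.
extended = ALLOWED_NETWORKS + [ip_network("10.0.5.0/24")]
print(check_login("10.0.5.7", extended))
```

The check depends only on the source address that the service sees, so a login that arrives from an address outside every listed range is rejected regardless of which URL the user started from.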

What does a Snowflake Architect need to consider when implementing a Snowflake Connector for Kafka?

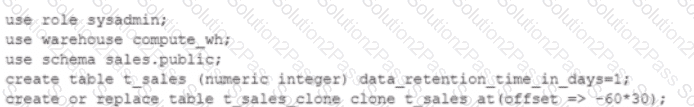

A user is executing the following command sequentially within a timeframe of 10 minutes from start to finish:

What would be the output of this query?

A company's Architect needs to find an efficient way to get data from an external partner, who is also a Snowflake user. The current solution is based on daily JSON extracts that are placed on an FTP server and uploaded to Snowflake manually. The files are changed several times each month, and the ingestion process needs to be adapted to accommodate these changes.

What would be the MOST efficient solution?

An Architect is designing a solution that will be used to process changed records in an orders table. Newly-inserted orders must be loaded into the f_orders fact table, which will aggregate all the orders by multiple dimensions (time, region, channel, etc.). Existing orders can be updated by the sales department within 30 days after the order creation. In case of an order update, the solution must perform two actions:

1. Update the order in the F_ORDERS fact table.

2. Load the changed order data into the special table ORDER_REPAIRS.

This table is used by the Accounting department once a month. If the order has been changed, the Accounting team needs to know the latest details and perform the necessary actions based on the data in the order_repairs table.

What data processing logic design will be the MOST performant?

What are characteristics of the use of transactions in Snowflake? (Select TWO).

How is the change of local time due to daylight saving time handled in Snowflake tasks? (Choose two.)
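The underlying wall-clock behavior can be checked independently of Snowflake. The sketch below uses Python's standard `zoneinfo` module to count how many real instants correspond to a given local hour on a given day. The function name is hypothetical, the `America/Los_Angeles` zone and the 2024 transition dates are chosen only as an example, and the code assumes time zone data is available on the system. It says nothing about Snowflake's scheduler itself.

```python
from datetime import date, datetime, timedelta, timezone
from zoneinfo import ZoneInfo

def instants_for_local_hour(day, hour, tz_name):
    """Return the UTC instants at which the local wall clock reads `hour`:00 on `day`."""
    tz = ZoneInfo(tz_name)
    # Scan a 72-hour UTC window around the day so every offset is covered.
    start = datetime(day.year, day.month, day.day, tzinfo=timezone.utc) - timedelta(days=1)
    hits = []
    for offset_hours in range(72):
        instant = start + timedelta(hours=offset_hours)
        local = instant.astimezone(tz)
        if local.date() == day and local.hour == hour and local.minute == 0:
            hits.append(instant)
    return hits

zone = "America/Los_Angeles"
ordinary = instants_for_local_hour(date(2024, 6, 1), 2, zone)
spring_forward = instants_for_local_hour(date(2024, 3, 10), 2, zone)
fall_back = instants_for_local_hour(date(2024, 11, 3), 1, zone)
print(len(ordinary), len(spring_forward), len(fall_back))
```

On an ordinary day a local hour maps to exactly one instant. On the spring transition day the 2 AM hour does not occur, and on the autumn transition day the 1 AM hour occurs twice. Any schedule expressed in local time has to account for both cases.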

An Architect on a new project has been asked to design an architecture that meets Snowflake security, compliance, and governance requirements as follows:

1) Use Tri-Secret Secure in Snowflake

2) Share some information stored in a view with another Snowflake customer

3) Hide portions of sensitive information from some columns

4) Use zero-copy cloning to refresh the non-production environment from the production environment

To meet these requirements, which design elements must be implemented? (Choose three.)

How does a standard virtual warehouse policy work in Snowflake?

Company A would like to share data in Snowflake with Company B. Company B is not on the same cloud platform as Company A.

What is required to allow data sharing between these two companies?

Which of the following are characteristics of how row access policies can be applied to external tables? (Choose three.)

Which Snowflake data modeling approach is designed for BI queries?

How can the Snowpipe REST API be used to keep a log of data load history?

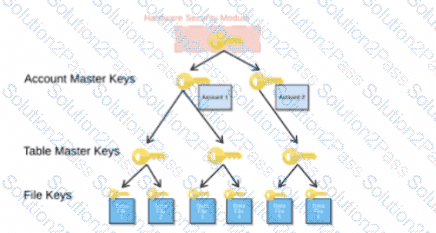

When activating Tri-Secret Secure in a hierarchical encryption model in a Snowflake account, at what level is the customer-managed key used?

What built-in Snowflake features make use of the change tracking metadata for a table? (Choose two.)

An Architect has a VPN_ACCESS_LOGS table in the SECURITY_LOGS schema containing timestamps of the connection and disconnection, username of the user, and summary statistics.

What should the Architect do to enable the Snowflake search optimization service on this table?

You are a Snowflake architect in an organization. The business team has asked you to deploy a use case that requires loading data which they can visualize through Tableau. New data arrives every day, and the old data is no longer required.

What type of table will you use in this case to optimize cost?

What are characteristics of Dynamic Data Masking? (Select TWO).